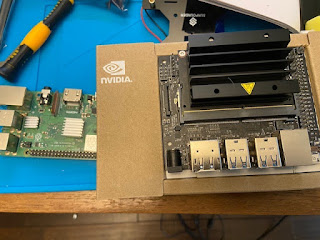

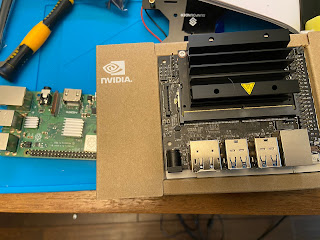

I attended re:Invent 2019 in Las Vegas, NV, where one of the vendors, Onica, was offering a chance to win their IoTanium Dev Kit. The challenge had four steps: (1) make motions and capture them; (2) upload the captured motion to an ML algorithm; (3) generate a model from the captured motion and associate it with movements and actions; and (4) play a game in which making those motions moves an avatar. After three tries, I finally completed the exercise. Most likely I was not consistent in my motions, which made it hard for the model to understand what I was trying to do. But I did it! :) As a result, I received a t-shirt and the IoTanium Dev Kit.

In this post I'll capture the unboxing of the Dev Kit; in subsequent posts, I'll show some projects that can be completed with it.

The Dev Kit comes in a basic black box, filled with parts and electronic bits for assembly. Upon opening the box, I am greeted immediately with instructions for c